Assessing Memory Safety in Programming Languages Like Rust and Go

Can These Languages Eliminate Memory-Handling Vulnerabilities for Programmers?

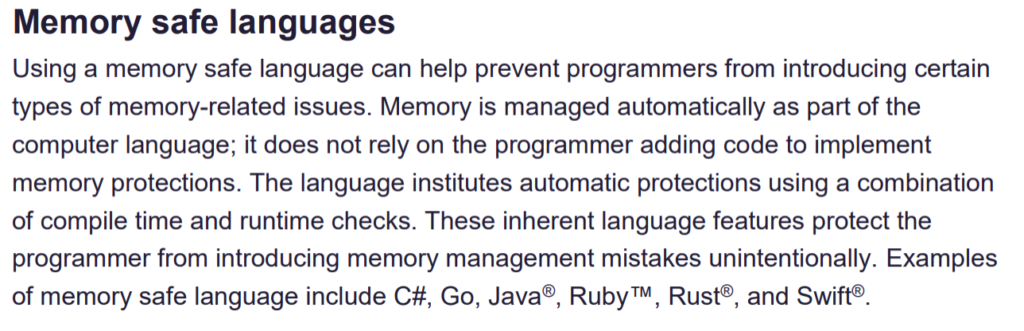

Much has been made recently of the memory safety provided by programming languages like Rust and Go. These languages have been designed to eliminate some of the language weaknesses that make it so easy for C and C++ programmers to write vulnerable software. These memory-safe languages are seen by many to have significant promise for helping programmers write code that is largely free of critical memory-handling vulnerabilities.

In this post, we will dig into a paper called “Unsafe’s Betrayal: Abusing Unsafe Rust in Binary Reverse Engineering Toward Finding Memory-Safety Bugs via Machine Learning,” which comes out of the Systems Software & Security Lab (SSLab) at Georgia Tech.

But first, let’s discuss more about what Rust is and what it’s designed to do for memory safety.

What Can Rust Do – and What Can’t it Do – When it Comes to Memory Safety?

Before going all-in, however, we should talk about the problems Rust solves and the ones it doesn’t.

One of the primary goals Rust aims to achieve is the elimination of memory safety problems. This goal is a significant security win because many of the most critical software vulnerabilities are related to memory safety issues. However, the Rust programming language doesn’t inherently solve all software security problems.

- It doesn’t ensure that all the open-source packages in your project’s dependency tree are secure – or even non-malicious.

- It doesn’t ensure that attackers haven’t inserted malicious code into your repository.

- It doesn’t prevent your programmers from doing bad cryptography.

- It won’t prevent authorization vulnerabilities.

- It won’t protect you from vulnerabilities in C libraries that your Rust project relies on and links to.

The list of possible ways to create vulnerabilities in Rust without creating memory safety issues is endless. At a high level, using Rust does not mean that the job of software security is done. But in another sense, with nearly 70% of the security patches in large software projects like Windows and Chrome addressing memory safety vulnerabilities, the inclusion of Rust could be a significant advantage.

Neither Windows nor Chrome is written in Rust (yet). But there is a lot of Rust code in Android: according to a recent Google Security blog post, Rust makes up roughly a fifth of the new native code in Android 13, and zero memory safety vulnerabilities have been identified in Android’s Rust code to date.

From these points, we know memory safety is important even though it is not a cure-all for AppSec, and we know Rust can help us write memory-safe programs.

What is Rust, Really? Breaking Down Safe Rust and Unsafe Rust

Rust is really two programming languages. One of these languages is called Safe Rust, and it attempts to make it impossible to perform unsafe memory operations. This functionality is kind of awesome, but it can make it hard to write high-performance code, and it can make some kinds of computation awkward or even impossible to write.

To provide some relief for programmers who need high performance or hate awkwardness, there is a second language called Unsafe Rust.

It is entirely possible to create code in Unsafe Rust that has memory safety weaknesses and introduces critical remote code execution vulnerabilities into programs, which obviously is not great. We want a language to help us write code that has no memory safety vulnerabilities, and instead we get Safe Rust plus a second language specifically created for writing unsafe memory code.

It’s not ideal, but we still get a huge benefit over C and C++ – all the unsafe code is marked! In the case of Rust, it lives inside a block of code labeled “unsafe”, which makes it easier for people reviewing code for memory safety issues to know what to focus on, and makes it easy for static analysis tools to find the most unsafe code. And, as we will see in the paper to be reviewed here, it is also possible for binary analysis tools to identify unsafe functions by looking at the compiled code even when the source code is unavailable.

It’s important to keep in mind that just because code is unsafe doesn’t mean it contains any bugs, memory safety vulnerabilities, or other security issues. It is entirely possible to have unsafe code with no vulnerabilities at all. What we give up when we use unsafe code blocks is Rust’s attempts to guarantee that there are no memory safety failures. Now, instead of relying on the programming language to enforce safety, we are relying on the programmer to get it right.
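To make this concrete, here is a minimal sketch of unsafe code that contains no vulnerability. The function name `first_element` is made up for illustration. The compiler does not check the raw-pointer read inside the `unsafe` block; the programmer's own emptiness check is what keeps it in bounds.

```rust
/// Returns the first element of `values`, or `None` if the slice is empty.
///
/// The function is safe to call: the `unsafe` block is only reached after
/// the emptiness check, so the raw-pointer read is always in bounds.
pub fn first_element(values: &[i32]) -> Option<i32> {
    if values.is_empty() {
        return None;
    }
    // SAFETY: the slice is non-empty, so `as_ptr()` points at a valid,
    // initialized `i32` that lives at least as long as `values`.
    let first = unsafe { *values.as_ptr() };
    Some(first)
}
```

Calling `first_element(&[7, 8, 9])` yields `Some(7)`, and an empty slice yields `None`. If the emptiness check were removed, the compiler would still accept the code, which is exactly the responsibility that shifts to the programmer.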

A Deeper Dive into Unsafe’s Betrayal: Abusing Unsafe Rust in Binary Reverse Engineering Toward Finding Memory-Safety Bugs via Machine Learning

The “Unsafe’s Betrayal” paper shows another benefit of Rust’s separation of code into memory-safe and unsafe compartments. The SSLab authors created a tool called rustspot that can recognize which functions in a compiled Rust binary contain unsafe code. This makes it easier for analysts to find memory safety vulnerabilities, because they can spend most of their fuzzing or reverse-engineering time on the functions most likely to contain them. While this appears to be the intended goal of the tool, I believe even regular Rust programmers could use a tool like rustspot to understand how much unsafe code their programs contain. Even if the code you write contains 0% unsafe code, there is not currently any reliable tool to identify all the unsafe code in your program’s dependencies.

One huge problem in modern software security is the prevalence of third-party software, often making up 80-90% of a program’s codebase, published onto the Internet by unknown parties with unknown (but typically $0) security budgets. Even if your Rust program has no unsafe code, it is entirely possible (in fact it is highly likely) that vulnerabilities may be introduced by libraries in its dependency graph that do have unsafe code.

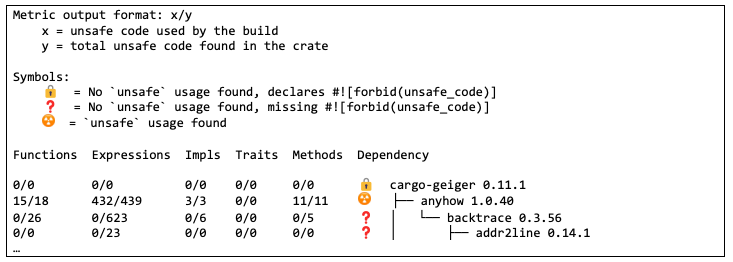

For software projects with strong security requirements, understanding how much of the codebase is unsafe code should be a requirement. One tool that can go a long way to identifying this code is cargo geiger. The cargo geiger command can look at the source code of a Rust crate and of all of its dependencies and point out where the code marked “unsafe” is. It reports, crate by crate, how much unsafe code it found.

Reviewing cargo geiger output for many crates will show a great many dependencies that have unsafe code. The geiger crate and its dependencies use 200 functions with unsafe code, which should be quite eye-opening when thinking about memory safety in Rust programs.

Cargo geiger is not part of the default Rust installation, but it can be downloaded and installed, and it provides useful information about unsafe code in your project’s code and third-party dependencies. However, cargo geiger has some known issues: it does not detect unsafe code inside macros or unsafe code generated by a crate’s build.rs. Projects with strong security requirements might well be interested in other ways of identifying unsafe code, as a second source of information, to build confidence. The tool from the current paper, rustspot, could provide this information when it is released.
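As an illustration of the macro case, here is a small sketch of how unsafe code can hide behind a macro. The macro `read_unchecked` and the function `third_byte` are hypothetical names, not taken from any real crate. The `unsafe` keyword appears only in the macro definition, so the call site reads like ordinary safe code.

```rust
/// Hypothetical macro: the `unsafe` keyword appears only in the macro body,
/// not at the places where the macro is invoked.
macro_rules! read_unchecked {
    ($slice:expr, $index:expr) => {
        // SAFETY: the caller must guarantee `$index < $slice.len()`.
        unsafe { *$slice.get_unchecked($index) }
    };
}

/// Looks entirely safe at the call site, yet expands to an `unsafe` block.
/// Index 2 is always in bounds here because the array has exactly 4 bytes.
pub fn third_byte(data: &[u8; 4]) -> u8 {
    read_unchecked!(data, 2)
}
```

A reviewer reading only `third_byte` sees no `unsafe` keyword, which is why a second source of information about unsafe code can be useful.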

How Does rustspot Work and What Did the SSLab Team Do?

Rustspot has several interesting use cases, both for attackers and defenders, derived from showing what code in a given Rust binary was generated from unsafe code blocks. So, how does it work?

The rustspot tool was developed by training a machine learning system. Rustspot is a classifier that can look at blocks of generated machine code and identify which ones originated from Rust functions with unsafe blocks. Machine learning classifiers are often trained on labeled data, which is the case with rustspot. There is a large amount of open-source Rust code, some of which includes code in blocks marked as unsafe. If we compile a large amount of available code and label the output with whether the original source code included unsafe blocks, we have an ideal training dataset for a machine learning model. This is what the SSLab team did.
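The labeling step can be sketched roughly as follows. This is only an illustration of the idea, not the SSLab pipeline: the type `LabeledSample`, the function `label_functions`, and the representation of compiled functions as name and byte pairs are all assumptions made for this example.

```rust
use std::collections::HashSet;

/// One training example (hypothetical layout): the bytes of a compiled
/// function plus a label saying whether its source contained `unsafe`.
#[derive(Debug, PartialEq)]
pub struct LabeledSample {
    pub machine_code: Vec<u8>,
    pub from_unsafe: bool,
}

/// Joins compiled functions with the set of function names that were found
/// to contain `unsafe` blocks in the source, producing labeled samples.
pub fn label_functions(
    compiled: &[(String, Vec<u8>)],
    unsafe_functions: &HashSet<String>,
) -> Vec<LabeledSample> {
    compiled
        .iter()
        .map(|(name, code)| LabeledSample {
            machine_code: code.clone(),
            from_unsafe: unsafe_functions.contains(name),
        })
        .collect()
}
```

The labels come for free from the source code, which is what makes open-source Rust crates such a convenient training corpus.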

They further trained their classifier using RustSec advisories. They identified 120+ documented memory safety vulnerabilities in the RustSec dataset of vulnerabilities in Rust crates, and labeled the vulnerable codebases where the vulnerabilities originated, as well as the type of memory safety vulnerability. They then compiled the vulnerable functions to create a dataset that maps Rust functions with memory safety issues to binary code. They further trained the classifier on this data, fine-tuning the unsafe classifier and adding some pattern-recognition ability to identify actual vulnerabilities, rather than just finding unsafe code blocks.

After training, they tested their work. The rustspot classifier was run against many crates, and the functions the classifier identified as originating from unsafe blocks were fuzzed using cargo fuzz. Compared with a naïve approach of fuzzing all functions in a crate, this targeted approach reduced the time required to find bugs by around 20%.
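The prioritization idea can be sketched like this. The struct `FunctionScore`, the function `fuzz_queue`, and the notion of a per-function probability with a threshold are assumptions for illustration; the post does not describe rustspot's actual output format.

```rust
/// Hypothetical per-function classifier output; the names and fields are
/// illustrative and not rustspot's real format.
#[derive(Debug, Clone, PartialEq)]
pub struct FunctionScore {
    pub name: String,
    /// Assumed probability in [0.0, 1.0] that the function came from unsafe code.
    pub unsafe_probability: f64,
}

/// Keeps functions at or above `threshold`, most suspicious first, so the
/// fuzzing budget is spent on likely-unsafe functions before anything else.
pub fn fuzz_queue(scores: &[FunctionScore], threshold: f64) -> Vec<String> {
    let mut selected: Vec<&FunctionScore> = scores
        .iter()
        .filter(|s| s.unsafe_probability >= threshold)
        .collect();
    // `total_cmp` gives a total order on f64; the operands are swapped
    // to sort in descending order.
    selected.sort_by(|a, b| b.unsafe_probability.total_cmp(&a.unsafe_probability));
    selected.into_iter().map(|s| s.name.clone()).collect()
}
```

Each name in the resulting queue would then be handed to a fuzzing harness in order, instead of fuzzing every function in the crate with equal effort.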

Takeaways from the SSLab Research

For vulnerability researchers, the key takeaway is that identifying unsafe code in binaries can help narrow the scope of fuzzing and reverse engineering, saving time when analyzing Rust binaries for vulnerabilities. Most of the useful vulnerabilities in Rust code are going to be in unsafe blocks, and rustspot makes it easy to find them, even when you do not have the source code. While the tool is not currently publicly available, the SSLab group has a history of posting tools to their GitHub organization, and they have informed me they will be posting it once their paper is accepted.

For application security and engineering organizations, the most important takeaway is that Rust programs can absolutely have memory safety vulnerabilities. They can even have vulnerabilities when none of the code in the program is marked unsafe (if it uses dependencies with unsafe code and they have vulnerabilities). Rust’s memory safety “guarantees” are only in effect when there is no unsafe code in the project or its dependencies, and many projects have lots of unsafe code in their dependencies.
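Here is a minimal sketch of how that can happen. The module `third_party` stands in for a dependency, and both it and `checksum_byte` are hypothetical. The application function contains no `unsafe` keyword, yet it inherits an out-of-bounds read from the dependency's unchecked access.

```rust
/// Stand-in for a third-party crate (hypothetical, for illustration only).
mod third_party {
    /// Declared as a safe function, but it skips the bounds check.
    /// Any `index >= data.len()` is an out-of-bounds read (undefined behavior).
    pub fn byte_at(data: &[u8], index: usize) -> u8 {
        unsafe { *data.get_unchecked(index) }
    }
}

/// Application code: no `unsafe` keyword anywhere in this function.
pub fn checksum_byte(packet: &[u8]) -> u8 {
    // Bug inherited from the dependency: a packet shorter than 4 bytes
    // makes this read past the end of the buffer.
    third_party::byte_at(packet, 3)
}
```

With a 4-byte packet such as `[1, 2, 3, 4]` the call behaves as expected and returns `4`. With a shorter, attacker-controlled packet it becomes a memory safety bug, even though the application's own code would pass a search for `unsafe`.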

While the Rust programming language can create very safe executables, the default seems to be to include hundreds or thousands of functions and expressions with unsafe code. Therefore, it is critical for engineers and AppSec staff working on high-assurance Rust projects to understand where all the unsafe code lies and make sure it is thoroughly reviewed and fuzz tested.

One existing tool that can help with this review and testing is cargo geiger, an open source cargo subcommand that enumerates all the unsafe code blocks in a crate and its dependencies. However, it does have some blind spots. When the SSLab team releases their rustspot tool, which looks for unsafe blocks in binary code, it could be another important component, one that might highlight functions that fall in cargo geiger’s blind spots.
