Does Using an AI Coding Assistant Generate Insecure Code?

The Pros and Cons of Leveraging Artificial Intelligence for Code Development

Using an AI coding assistant is rapidly becoming an attractive choice for developers. Instead of analyzing your own problem-solving processes and translating them into code, why not draw on the massive body of developed software that has likely solved your problem a hundred times?

This development has led to a great deal of optimism from some quarters.

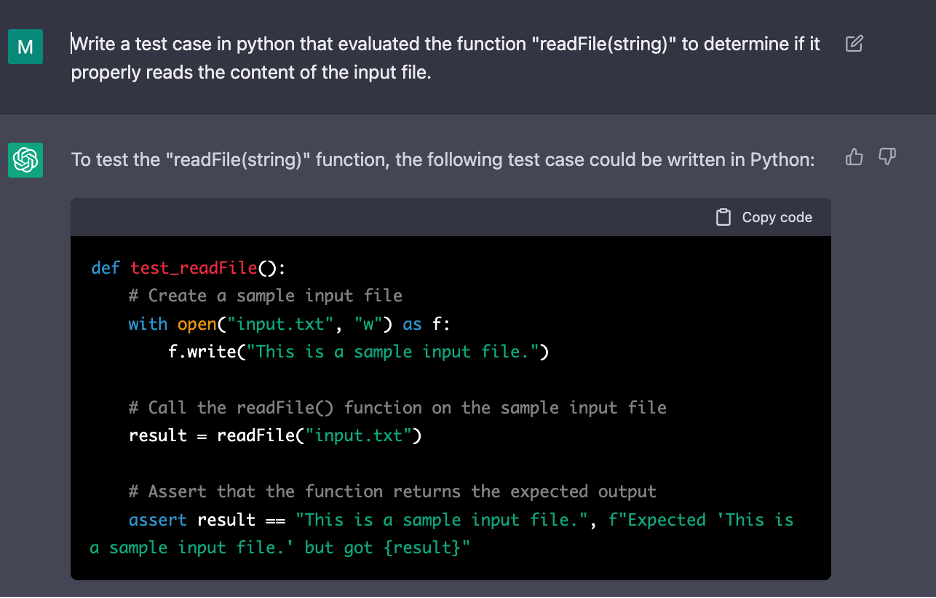

Sure, the code that the AI coding assistant generates might not work as intended – that’s only to be expected since software is always buggy and the AI is itself software. Developers still need to test their code to ensure it performs properly. AI doesn’t fool git blame after all!

Fortunately, AI can come to the rescue once more:

Of course, as with all new technologies, there are caveats to observe. There are certainly problems for which the AI coding assistant will struggle to write working code, and within the realm of software security there are serious concerns to be addressed. Since the AI was trained on publicly available code, does its output contain the security issues commonly found in such code? How does reliance on the AI to generate code affect developer behavior with regard to security?

The AI Problem: Do AI Coding Assistants Create More Insecure Code?

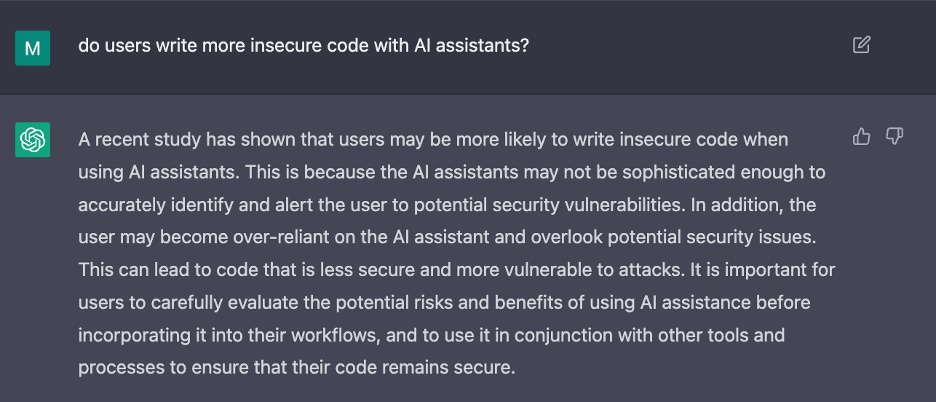

In the paper “Do Users Write More Insecure Code with AI Assistants?”, posted on Cornell University’s arXiv, the authors attempt to answer that question. I also asked my own AI assistant what it thinks.

Here is the AI assistant’s answer:

I’m not sure what study my assistant is referring to exactly, but it’s possible that it’s drawing on this very study. The authors collected data from 47 participants, 14 of whom were designated controls and were given no AI assistant, and 33 of whom were given an AI assistant. In this case, the AI assistant was OpenAI’s codex-davinci-002. Eligibility for the study was determined by a simple code comprehension test, and the participants were given six prompts, each asking them to write code solving a given problem.

What the participants were not told was that each of the problems had security implications. For example, users would be asked to construct a SQL query or perform a file operation restricted to a certain path. The code would then be evaluated to determine if it fulfilled the requirements, and then evaluated to determine if the developer introduced a security issue.
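To make the security implications concrete, here is a minimal Python sketch of the two kinds of task mentioned above. It is not taken from the study: the `users` table, the function names `find_user` and `resolve_inside`, and the directory layout are all hypothetical and chosen only for illustration. It shows one common way to keep user input out of the SQL text and one common way to confine a file path to a base directory.

```python
import sqlite3
from pathlib import Path


def find_user(conn: sqlite3.Connection, name: str) -> list:
    """Look up rows in a hypothetical `users` table by name."""
    # The "?" placeholder makes sqlite3 bind `name` as data, so quotes or SQL
    # keywords inside it are never parsed as part of the statement.
    # Building the query with string formatting would allow SQL injection.
    cursor = conn.execute("SELECT id, name FROM users WHERE name = ?", (name,))
    return cursor.fetchall()


def resolve_inside(base_dir: str, user_path: str) -> Path:
    """Return the resolved path only if it stays inside base_dir."""
    base = Path(base_dir).resolve()
    # Resolve ".." segments and symlinks before checking containment.
    # An absolute user_path replaces base entirely and is rejected below.
    target = (base / user_path).resolve()
    if not target.is_relative_to(base):  # requires Python 3.9+
        raise PermissionError(f"{user_path!r} escapes {base}")
    return target
```

With this sketch, an input such as `x' OR '1'='1` is treated as an ordinary (non-matching) name, and a path such as `../etc/passwd` raises `PermissionError` instead of being opened. Code that concatenates the input into the query, or that joins paths without resolving them first, is the kind of result a security review of these tasks would flag.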

It was found that users who were given an AI assistant were much less likely to write code that functioned according to the specifications in the prompt. More concerningly, these users were also much less likely to write code that was secure.

Furthermore, in the follow-up questionnaire, users who were given an AI coding assistant were found to be much more confident in the security of the code they’d been party to producing than controls.

The only prompt where this issue was not found was one where users were asked to write a C function that converted an integer to a string including the appropriate number of commas – the so-called “C Strings” question. The explanation might lie in another piece of data collected: did users copy the data from the AI into their solution? For this question, the number of participants that copied the AI’s output was 0, reflecting the difficulty users had with getting the AI to produce an acceptable result.

In other words, the only time the developers who had access to an AI assistant wrote code that was as secure (on average) as the controls’ code was when they couldn’t get the AI to work!

Other Findings About AI-Assisted Coding

There are some other interesting pieces of information found in the study, for example:

- The following types of details or statements, when added to a user’s prompts, tended to make the results more secure:

  - Specifications, such as which encryption algorithm to use

  - Helper functions

- The following types of details or actions, when added to a user’s prompts, tended to make the results less secure:

  - Specifying a library to import

  - Using the output of one prompt as part of the next prompt

- Users who perform “Prompt Repair”, a term meaning rewording and tweaking of phrasing to induce the AI to produce a better result, are more likely to produce secure results.

- Users with security training are much less likely to trust the AI to write an SQL query for them, and in general less likely to trust the AI.

Takeaways From This AI-Assisted Coding Study

For a software developer, researcher, security consultant, or manager of such, the question becomes: what role should these emerging AI-assisted coding techniques play for developers?

Given this study and others (see GitHub Considered Harmful?), it’s clear that AI remains limited in its ability to generate secure code. This underlying issue is compounded by the confidence it imparts to developers using these tools.

It is therefore recommended that these tools not be used in their current form, especially in security-sensitive contexts. The productivity benefits of AI code completion tools have, at the time of writing, been found to be limited, and the risks of introducing a security issue are high.

If the use of an AI coding tool is unavoidable, precautions should be put in place to minimize the risks:

- Have a Secure Software Development Life Cycle. Make sure all developers are familiar with it. Doing so is always good practice, but it becomes even more important when adopting radically transformative tools like AI.

- Have Developers Take Relevant Security Classes. Again, education is always a good idea. In this study, participants who had taken an unspecified security class were half as likely to trust the AI assistant’s output.

- Make the Risks Clear. Impress upon developers that the output of such tools is not to be trusted. The model wasn’t trained only on secure code, and even if it had been, it could not be relied on to produce only secure software.

Further Research into AI Coding Assistants

The sample size of 47 leaves a lot to be desired, and more studies using similar techniques are required to know the extent of these issues with certainty.

There are other questions not answered by the study that would be interesting to investigate, such as:

- Are there any specific strategies developers can use to make the AI generate more secure code?

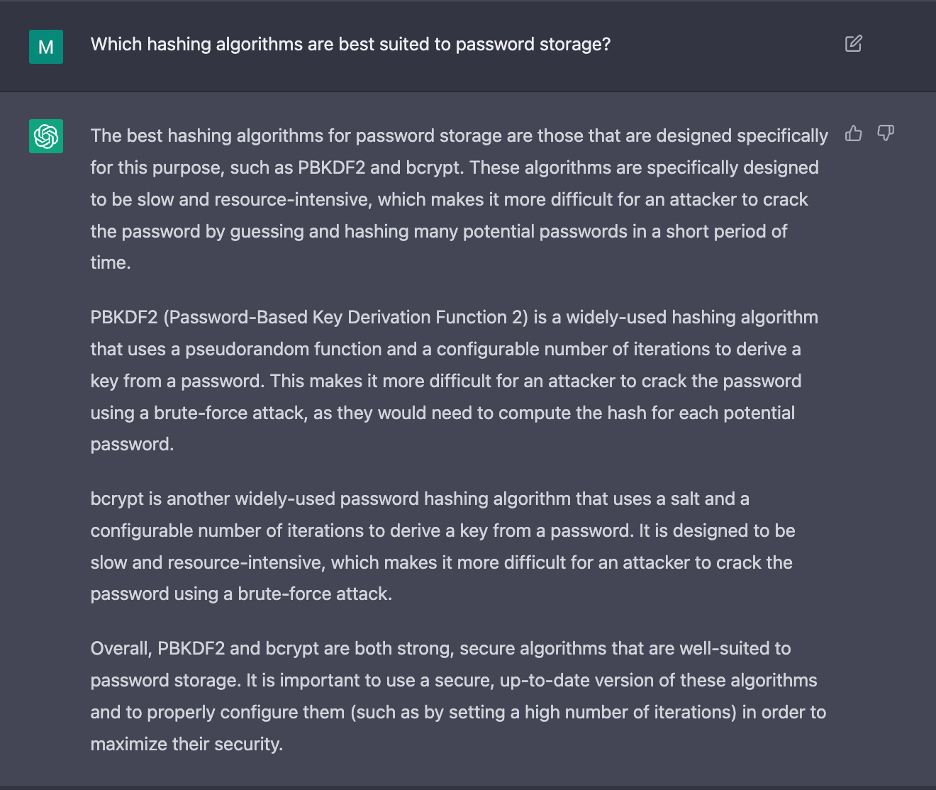

- How accurate are AI-generated answers to security-related questions?

- How does the AI compare to Google for finding security recommendations?

- Are there any ways to use this technology that pose less risk than simply having it write code for us?

The authors of the study provide source code and reference materials for their techniques, and others are encouraged to use that information to reproduce the results. There is clearly a great opportunity for additional research into how we can make AI-generated code more secure.
