How to Prevent Exploitation of Amazon S3 Buckets with Weak Permissions

Identify the Common Ways S3 Buckets Can Be Misconfigured

According to a 2017 report, 93 percent of organizations are using some form of cloud computing. This widespread adoption brings new threats that many organizations’ current security processes may not be equipped to handle.

One of these threats is Amazon S3 (AWS S3) buckets with weak permissions. New tools and growing awareness among attackers have accelerated the exploitation of misconfigured S3 buckets, which have been key factors in recent breaches at Alteryx, FedEx, the National Credit Federation, and even the United States military. The root causes of these misconfigurations are a lack of oversight of cloud computing use and a misunderstanding of how to identify vulnerable S3 buckets.

Here’s an overview of the common ways S3 bucket permissions may be misconfigured and how to identify them.

The Two Levels of AWS S3 Permissions and Associated Security Risks

AWS S3 permissions apply at two levels: the bucket and the objects in the bucket. Permissions at both levels can be granted to owners, specific users, or groups of users. The most common misconfigurations concern who is allowed to access a resource.

Often, a misconfigured resource can be accessed by any user on the Internet with no authentication. Even restricting access to authenticated users creates security issues, because that group includes anyone on the Internet with a free AWS account.

Bucket permissions control who can create objects, list objects, read permissions, and write permissions. Permitting everyone or all authenticated users to list objects or read permissions may be acceptable in some cases. Granting the right to create objects or write permissions to anyone other than specific, trusted users or groups is extremely dangerous: an attacker could upload illegal content to the bucket, or change its permissions to block access that the application needs in order to operate.
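To make the bucket-level distinction concrete, here is a minimal Python sketch that flags ACL grants to the two public groups. It assumes the ACL is a dictionary shaped like an S3 GetBucketAcl response (for example, the return value of boto3's `get_bucket_acl`); the function name and the "review"/"dangerous" severity labels are illustrative choices, not an AWS feature.

```python
"""Flag bucket ACL grants to public groups (illustrative sketch)."""

# Group URIs that S3 uses in ACL grants for "everyone" and for
# "any authenticated AWS user".
ALL_USERS = "http://acs.amazonaws.com/groups/global/AllUsers"
AUTH_USERS = "http://acs.amazonaws.com/groups/global/AuthenticatedUsers"
GROUP_NAMES = {ALL_USERS: "everyone", AUTH_USERS: "authenticated users"}

# S3 API permission names on a bucket, mapped to this article's wording.
BUCKET_PERMISSIONS = {
    "READ": "list objects",
    "WRITE": "create objects",
    "READ_ACP": "read permissions",
    "WRITE_ACP": "write permissions",
    "FULL_CONTROL": "full control",
}

# Rights that let an outsider add content or change access.
DANGEROUS = {"WRITE", "WRITE_ACP", "FULL_CONTROL"}


def risky_bucket_grants(acl):
    """Return (group, permission, severity) for every grant to a public group.

    `acl` has the shape of an S3 GetBucketAcl response:
    {"Grants": [{"Grantee": {...}, "Permission": "READ"}, ...]}
    """
    findings = []
    for grant in acl.get("Grants", []):
        uri = grant.get("Grantee", {}).get("URI")
        if uri not in GROUP_NAMES:
            continue  # the owner or a specific account, not a public group
        perm = grant["Permission"]
        severity = "dangerous" if perm in DANGEROUS else "review"
        findings.append((GROUP_NAMES[uri], BUCKET_PERMISSIONS.get(perm, perm), severity))
    return findings
```

Grants to the owner or to named accounts are skipped on purpose; the sketch only answers the question this section raises, namely what the public groups are allowed to do.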

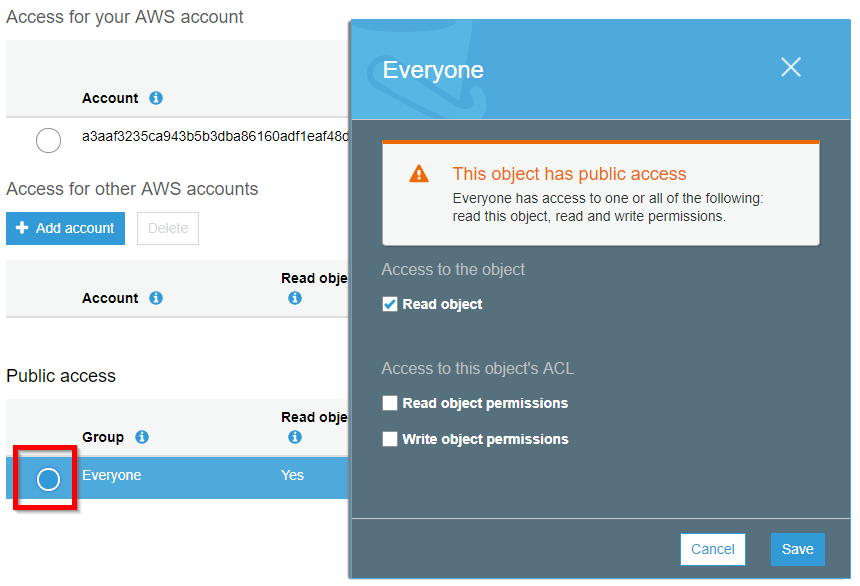

Object permissions are read object, read permissions, and write permissions. Read object allows authorized users to download the object. It can be useful to let the “everyone” or “authenticated users” groups read an object, but administrators should ensure that neither group can read any object that contains sensitive information, because this is the most common way that S3 buckets leak information to attackers.
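The object-level check that matters most, whether a public group holds read object, can be sketched the same way. This is an illustrative helper, not part of any AWS tool; it assumes an input shaped like an S3 GetObjectAcl response.

```python
"""Report which public groups can download an object (illustrative sketch)."""

ALL_USERS = "http://acs.amazonaws.com/groups/global/AllUsers"
AUTH_USERS = "http://acs.amazonaws.com/groups/global/AuthenticatedUsers"

# FULL_CONTROL includes READ, so either permission lets a grantee download.
READ_OBJECT = {"READ", "FULL_CONTROL"}


def public_readers(object_acl):
    """Return the set of public groups that hold "read object" on an object.

    `object_acl` has the shape of an S3 GetObjectAcl response.
    """
    readers = set()
    for grant in object_acl.get("Grants", []):
        if grant.get("Permission") not in READ_OBJECT:
            continue  # READ_ACP / WRITE_ACP do not allow downloading
        uri = grant.get("Grantee", {}).get("URI")
        if uri == ALL_USERS:
            readers.add("everyone")
        elif uri == AUTH_USERS:
            readers.add("authenticated users")
    return readers
```

An empty result means neither public group can download the object through its ACL; bucket policies, discussed later, are a separate matter.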

Creating Weak S3 Permissions

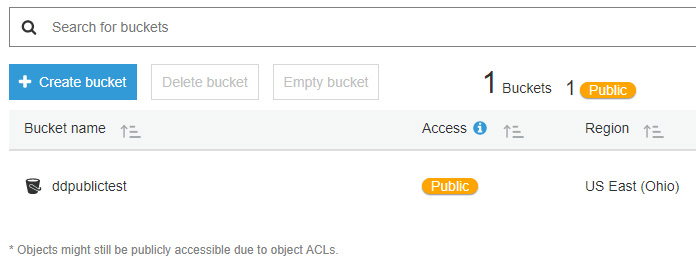

When a bucket is listed as “Public,” any user on the Internet can list the contents of that bucket. Public users can also be allowed to write files, but this is not the default when granting public access. The following screenshot shows how a bucket with public settings appears in the AWS console.

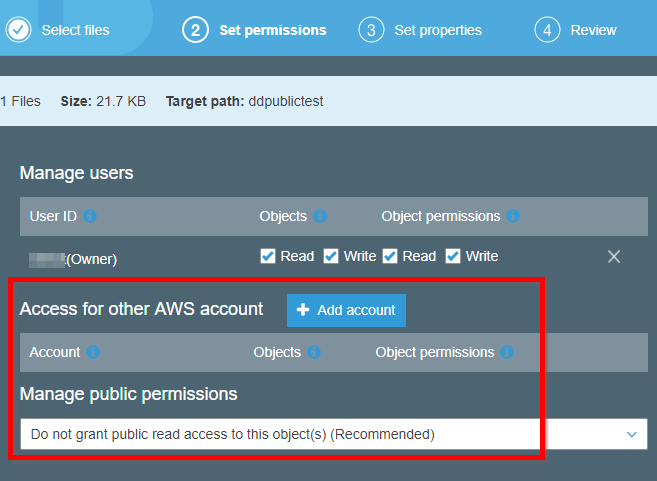

Individual files within the bucket may have different permissions. In the console, the file permissions may be assigned during a file upload, as the screenshot illustrates.

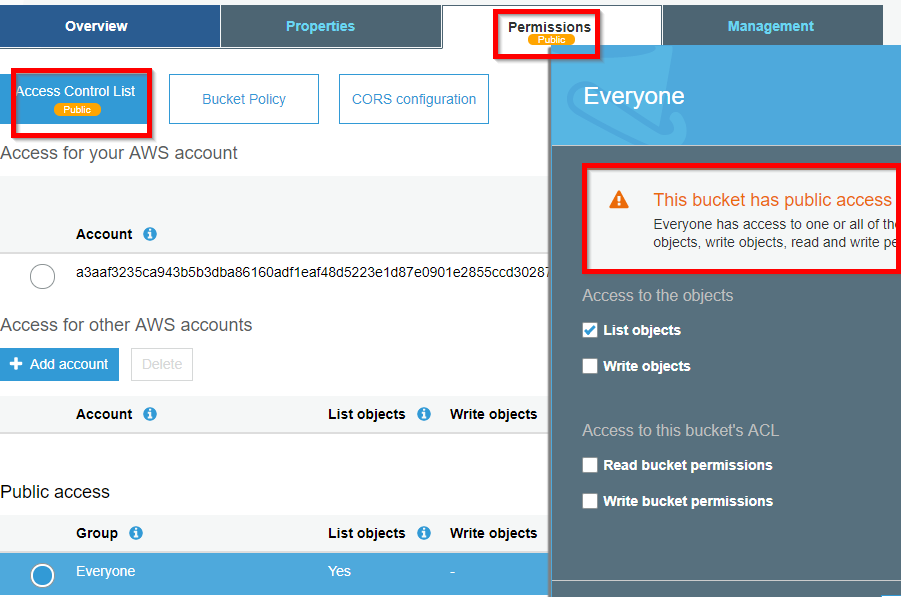

Despite the bucket’s public permissions to list files, only the owner may view this file. So even though a bucket is listed as public, it does not necessarily mean that the files in the bucket are also public. If the AWS administrator attempts to configure public permissions on a bucket, the AWS console issues a dire warning, as depicted below.

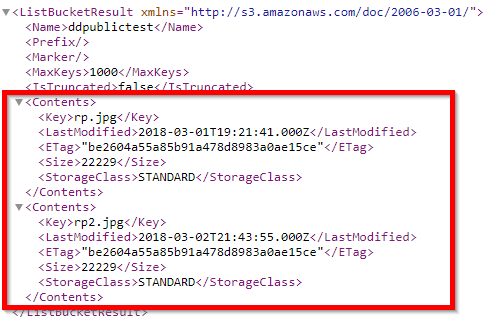

Accessing the ddpublictest S3 bucket in a web browser displays the following XML, which shows that the bucket contains two files but does not indicate that only one of them is public.

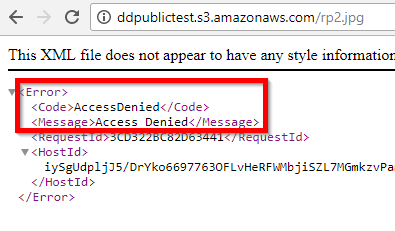
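Because a listing like this only names the objects, an auditor still has to test each key separately. A minimal sketch for pulling the keys out of such a listing with Python's standard library; the function name is hypothetical.

```python
"""Extract object keys from an S3 bucket listing (illustrative sketch)."""
import xml.etree.ElementTree as ET

# Namespace used by S3 ListBucketResult documents.
S3_NS = {"s3": "http://s3.amazonaws.com/doc/2006-03-01/"}


def listed_keys(xml_text):
    """Return the <Key> of every <Contents> entry in a ListBucketResult.

    The listing describes each object (name, size, date) but says nothing
    about whether it is readable, so every key still needs its own check.
    """
    root = ET.fromstring(xml_text)
    return [el.text for el in root.findall("s3:Contents/s3:Key", S3_NS)]
```

A listing returns at most 1,000 keys per response; when more exist, the response sets IsTruncated to true and further requests are needed, which this sketch does not handle.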

Verifying public access is simple, since the requestor does not need to provide any authentication headers. Requesting the file in a browser is sufficient to test for public access to a file. When a file is not public, the server responds with XML indicating that the request is denied, as follows.

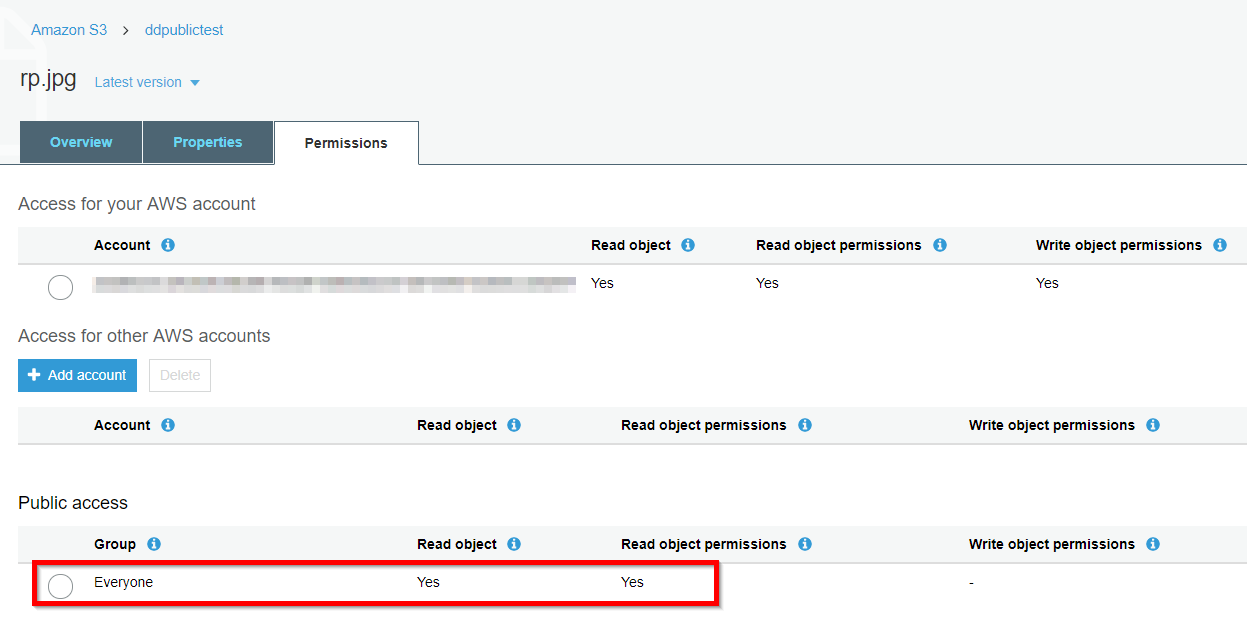
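The same unauthenticated test can be scripted. The sketch below assumes a DNS-compatible bucket name and the global s3.amazonaws.com endpoint, and sends a HEAD request instead of a GET so the object is not downloaded; the helper names are hypothetical.

```python
"""Test whether an object can be fetched without credentials (sketch)."""
import urllib.error
import urllib.parse
import urllib.request


def object_url(bucket, key):
    """Virtual-hosted-style URL; assumes the bucket name is DNS-compatible."""
    return f"https://{bucket}.s3.amazonaws.com/{urllib.parse.quote(key)}"


def classify(status):
    """Map an HTTP status code to an access verdict."""
    if status == 200:
        return "public"
    if status == 403:
        # Also returned for a missing key when the caller cannot list the bucket.
        return "denied"
    if status == 404:
        return "not found"
    return f"unexpected ({status})"


def check_anonymous(bucket, key, timeout=10):
    """Send an unsigned HEAD request, just as a browser sends an unsigned GET.

    HEAD needs the same read permission as GET but skips the download.
    """
    request = urllib.request.Request(object_url(bucket, key), method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return classify(response.status)
    except urllib.error.HTTPError as err:
        return classify(err.code)
```

For example, `check_anonymous("example-bucket", "report.pdf")` (hypothetical names) returns "public" or "denied". Network failures that produce no HTTP status, such as DNS errors, are not caught.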

After configuring public permissions on a specific file, the permissions tab shows that everyone can read the file and its permissions.

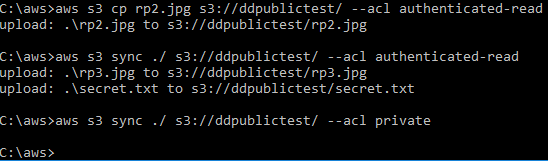

Making buckets or files public in the AWS console produces clear warning banners that denote the danger, but the AWS command-line interface (CLI) gives no such warning. The following command uploads the rp2.jpg file to our S3 bucket with permissions that allow any AWS user to read it.

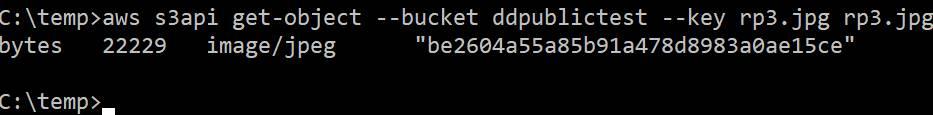

Visiting the file in a web browser, even while logged into the AWS console, returns the “Access Denied” XML response, as though the file were private, because the browser’s request is not signed with AWS credentials. For the same reason, S3 bucket scanning tools often misidentify these files as private. However, a completely unrelated AWS account can download the files using the s3api get-object command, which signs the request with that account’s credentials, as shown in this screenshot.
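The gap between what a browser sees and what any AWS account can read follows directly from the canned ACLs. The dictionary in this sketch covers only four of S3’s canned ACLs, and the helper is a hypothetical illustration rather than an AWS API.

```python
"""Why an anonymous check misses authenticated-read objects (sketch)."""

ALL_USERS = "http://acs.amazonaws.com/groups/global/AllUsers"
AUTH_USERS = "http://acs.amazonaws.com/groups/global/AuthenticatedUsers"

# Groups granted READ by a subset of canned ACLs
# (the owner always keeps FULL_CONTROL).
CANNED_ACL_READERS = {
    "private": set(),
    "public-read": {ALL_USERS},
    "public-read-write": {ALL_USERS},
    "authenticated-read": {AUTH_USERS},
}


def exposure(canned_acl):
    """Compare what an unsigned request sees with what any AWS account sees."""
    readers = CANNED_ACL_READERS[canned_acl]
    anonymous = ALL_USERS in readers
    # AllUsers also covers signed requests, so it implies any-account access.
    any_account = anonymous or AUTH_USERS in readers
    return {
        "anonymous_request_succeeds": anonymous,
        "any_aws_account_can_read": any_account,
        # True when a browser or an unauthenticated scanner would report "private".
        "scanner_blind_spot": any_account and not anonymous,
    }
```

Only authenticated-read lands in the blind spot: the file looks private to an unsigned request, yet any AWS account can download it.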

The AWS sync command further complicates permissions management. When an entire local directory is synced to the S3 bucket, the specified permissions are applied to the files that are uploaded, but the command does not modify permissions on files that are already in the bucket. In this example, permissions are mistakenly set with the authenticated-read ACL. A second sync command is then run to give only the owner rights to the files, but it has no effect, because the unchanged files are not uploaded again.

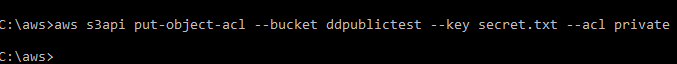
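The sync behavior described above can be modeled in a few lines. This is a simplified sketch, not the CLI’s implementation: it assumes sync’s default comparison, in which a local file is uploaded only when it is missing from the bucket, its size differs, or the local copy is newer, and that only uploaded files receive the `--acl` value.

```python
"""Model of why a second `aws s3 sync --acl ...` leaves old ACLs in place."""
from dataclasses import dataclass


@dataclass
class RemoteObject:
    size: int
    mtime: float
    acl: str


def simulate_sync(local_files, bucket, acl):
    """Apply one modeled sync run; return the keys that were (re)uploaded.

    local_files maps key -> (size, mtime); bucket maps key -> RemoteObject.
    """
    uploaded = []
    for key, (size, mtime) in local_files.items():
        remote = bucket.get(key)
        if remote is None or remote.size != size or mtime > remote.mtime:
            # Upload: object and ACL are replaced. S3 records the upload time,
            # which is at least the local mtime, so reusing mtime suffices here.
            bucket[key] = RemoteObject(size, mtime, acl)
            uploaded.append(key)
        # Otherwise the file is skipped and its existing ACL is untouched.
    return uploaded
```

Running the model twice, first with authenticated-read and then with private, uploads nothing on the second pass, so every object keeps authenticated-read.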

The appropriate way to modify permissions on an existing file is the AWS s3api put-object-acl command, as shown in the following screenshot.
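When many existing objects need the same fix, the put-object-acl commands can be generated rather than typed. The sketch below only builds command lines and does not call AWS; the `private` canned ACL default and the helper names are assumptions for illustration.

```python
"""Generate put-object-acl commands for existing objects (sketch)."""
import shlex


def put_object_acl_command(bucket, key, acl="private"):
    """Argument list for `aws s3api put-object-acl` with a canned ACL."""
    return ["aws", "s3api", "put-object-acl",
            "--bucket", bucket, "--key", key, "--acl", acl]


def remediation_script(bucket, keys, acl="private"):
    """One shell-quoted command per key, for review before running."""
    return "\n".join(
        shlex.join(put_object_acl_command(bucket, key, acl)) for key in keys
    )
```

Once reviewed, each argument list can also be run directly with `subprocess.run(cmd, check=True)`, which avoids shell quoting altogether.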

Identifying Misconfigured Buckets

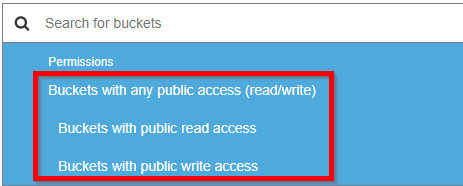

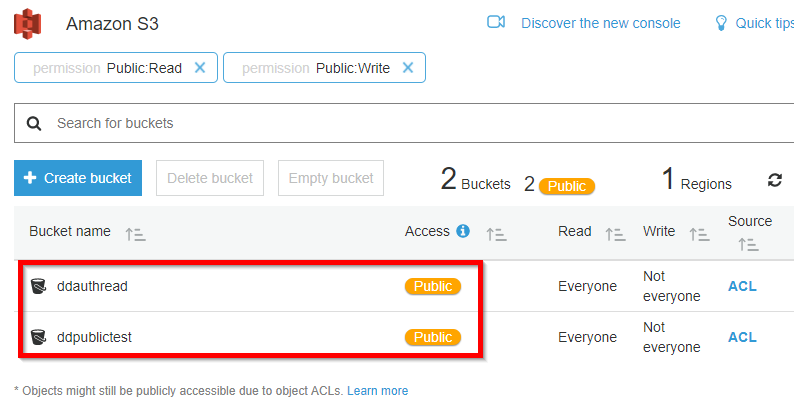

Amazon has recently improved the AWS console so that it displays warning banners for all resources available to the public or to all authenticated users. Amazon has also added a feature to search for public buckets, as shown below.

This search correctly identifies buckets with list permissions available to everyone or authenticated users.

However, this search does not flag buckets that lack public list permissions but contain public files. The ddprivateplus bucket does not allow public listing, yet it contains a single file that is readable by everyone. There is no immediately obvious warning about this public file; the AWS administrator must click on the “everyone” group’s permissions before the warning is displayed.

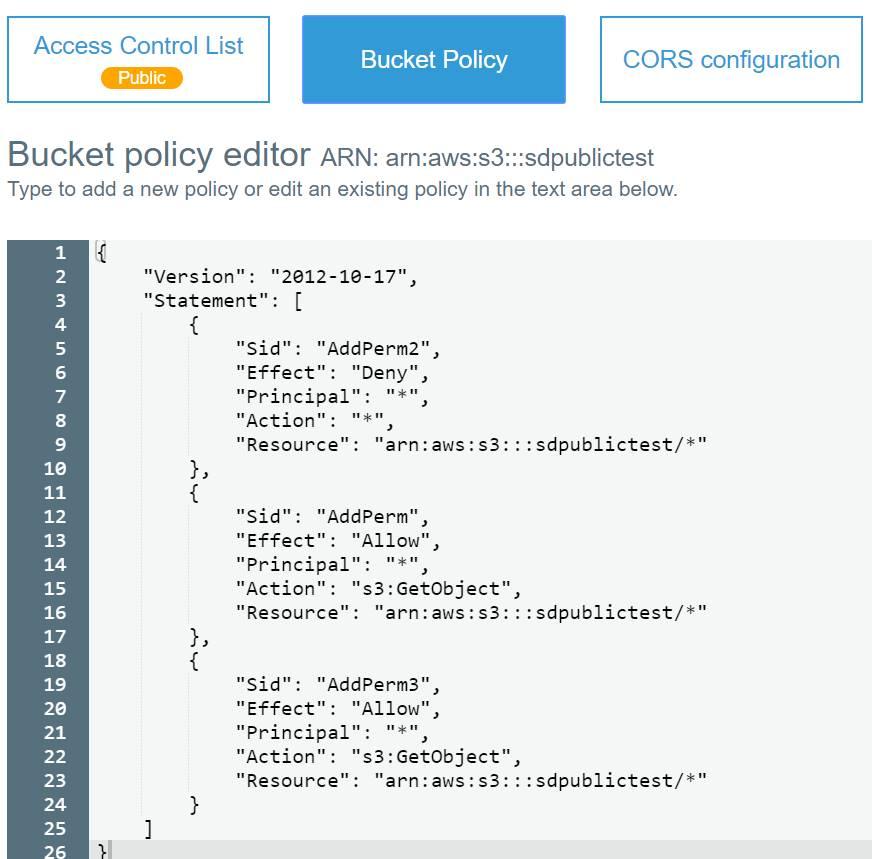

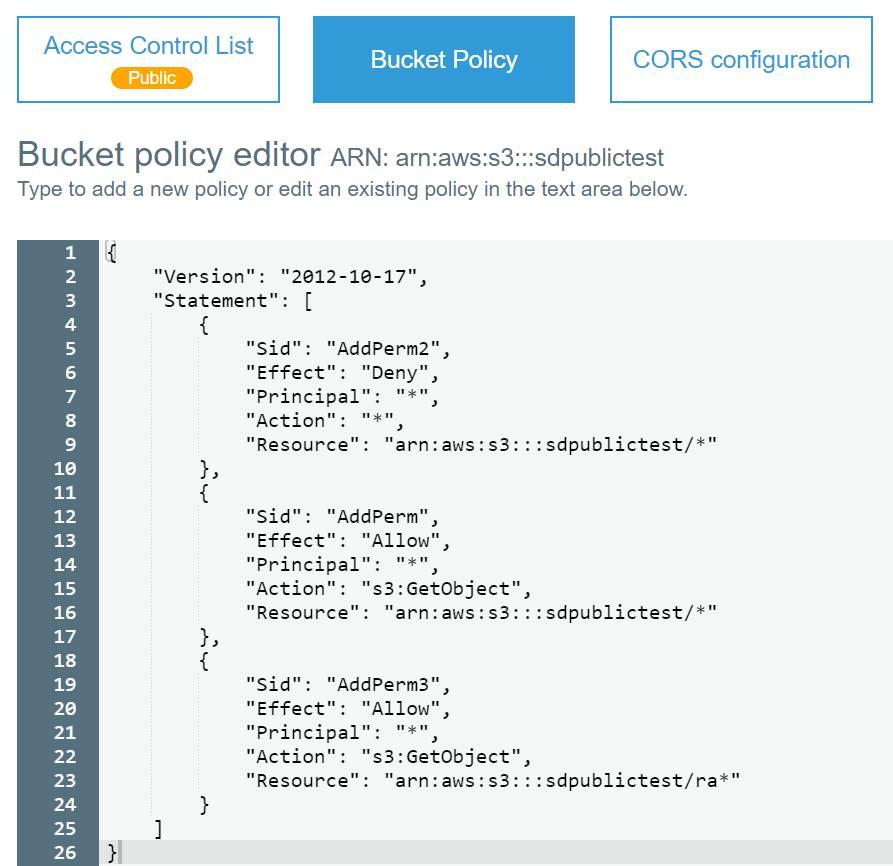
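The gap between the bucket-level search and the object-level reality can be sketched by evaluating both views side by side. The inputs are assumed to be shaped like GetBucketAcl and GetObjectAcl responses, the function names are hypothetical, and bucket policies are ignored.

```python
"""Catch public objects inside buckets that are not publicly listable (sketch)."""

ALL_USERS = "http://acs.amazonaws.com/groups/global/AllUsers"
AUTH_USERS = "http://acs.amazonaws.com/groups/global/AuthenticatedUsers"
PUBLIC_GROUPS = {ALL_USERS, AUTH_USERS}
READ = {"READ", "FULL_CONTROL"}  # FULL_CONTROL includes READ


def grants_public_read(acl):
    """True if the ACL gives READ to everyone or to authenticated users.

    On a bucket, READ means "list objects"; on an object, "read object".
    """
    return any(
        grant.get("Grantee", {}).get("URI") in PUBLIC_GROUPS
        and grant.get("Permission") in READ
        for grant in acl.get("Grants", [])
    )


def audit_bucket(bucket_acl, object_acls):
    """Combine the bucket-level view with a per-object scan.

    object_acls maps each key to its GetObjectAcl-shaped dict.
    """
    return {
        # What a search for publicly listable buckets would report.
        "publicly_listable": grants_public_read(bucket_acl),
        # What only a per-object scan reveals.
        "public_objects": sorted(
            key for key, acl in object_acls.items() if grants_public_read(acl)
        ),
    }
```

For a bucket set up like ddprivateplus, publicly_listable is False while public_objects still names the readable file.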

Bucket policies further complicate the task of determining which objects within a bucket are public. A bucket policy is evaluated together with object ACLs: an explicit Deny in the policy blocks access even when an object’s ACL allows it, and an Allow in the policy can grant access that the ACL does not. Each statement in a bucket policy typically has five elements: Sid, Effect, Principal, Action, and Resource. The Sid is simply an identifier for the statement. The Effect controls whether access to the specified resource is allowed or denied. The Principal specifies which users the statement covers. The Action defines which permissions the statement grants or denies. The Resource defines which objects the statement covers. When the policy is evaluated, a statement with a more specific Resource does not override a more general one: an explicit Deny in any matching statement wins, and otherwise any matching Allow grants access.

The following screenshot shows a bucket policy for a bucket marked public with two files, ra.jpg and rp.jpg. With this policy, neither object can be accessed directly. An explicit “Deny” always overrides an “Allow” that applies to the same request, and the order of the statements is irrelevant.

The following policy allows unauthenticated access to all resources whose names begin with the characters “ra”, which means that the ra.jpg file is publicly accessible, but the rp.jpg file is not. AddPerm3 grants this access because its resource, arn:aws:s3:::sdpublictest/ra*, matches ra.jpg. Being more specific than the arn:aws:s3:::sdpublictest/* resource in AddPerm2 does not by itself let AddPerm3 supersede AddPerm2: if any statement explicitly denied access to ra.jpg, the deny would still win.
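The evaluation rule can be checked with a small model. This Python sketch is a deliberate simplification of AWS policy evaluation: it ignores Condition, NotPrincipal, NotAction, NotResource, ACLs, and IAM policies, matches principals as plain strings, and the function names are hypothetical.

```python
"""Simplified bucket-policy evaluation: an explicit Deny beats any Allow."""
import re


def _as_list(value):
    return value if isinstance(value, list) else [value]


def _wildcard_match(pattern, text):
    """Match with policy-style wildcards: * (any run) and ? (one character)."""
    regex = "".join(
        ".*" if ch == "*" else "." if ch == "?" else re.escape(ch) for ch in pattern
    )
    return re.fullmatch(regex, text, flags=re.DOTALL) is not None


def _principal_matches(principal, caller):
    if principal == "*":
        return True  # everyone, including anonymous requests
    if isinstance(principal, dict) and "AWS" in principal:
        allowed = _as_list(principal["AWS"])
        return "*" in allowed or caller in allowed
    return False


def evaluate(policy, caller, action, resource):
    """Return "Deny", "Allow", or None when no statement applies.

    None means the policy neither grants nor blocks the request; S3 then
    decides from the remaining mechanisms, such as ACLs.
    """
    allowed = False
    for statement in _as_list(policy.get("Statement", [])):
        if not _principal_matches(statement.get("Principal"), caller):
            continue
        actions = _as_list(statement.get("Action", []))
        if not any(_wildcard_match(a.lower(), action.lower()) for a in actions):
            continue  # action names are compared case-insensitively
        resources = _as_list(statement.get("Resource", []))
        if not any(_wildcard_match(r, resource) for r in resources):
            continue
        if statement.get("Effect") == "Deny":
            # Order and Resource specificity are irrelevant: a matching Deny wins.
            return "Deny"
        if statement.get("Effect") == "Allow":
            allowed = True
    return "Allow" if allowed else None
```

With a Deny on `arn:aws:s3:::sdpublictest/*` and an Allow on `arn:aws:s3:::sdpublictest/ra*` (hypothetical statements, not the ones in the screenshots), `evaluate` returns "Deny" for ra.jpg: the narrower Allow does not win.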

While bucket policies can be a powerful way to implement complex authorization schemes on S3 buckets, they greatly complicate the process of determining object permissions. When bucket policies exist, the most accurate and expedient way to check for public permissions is to use an AWS account with no explicit rights to the S3 bucket and request every object in the bucket.
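That probing approach can be automated along these lines. The sketch assumes boto3 is installed and that a credentials profile is configured for an AWS account with no explicit rights to the bucket; the profile name is your choice, and the key list itself must come from an account that can list the bucket, such as the owner’s.

```python
"""Request every object with an account that has no rights to the bucket."""


def readable_keys(keys, can_read):
    """Return the keys for which can_read(key) is True, preserving order."""
    return [key for key in keys if can_read(key)]


def boto3_reader(bucket, profile):
    """Build can_read() from a separate, unprivileged AWS credentials profile."""
    import boto3  # imported here so the rest of the sketch runs without boto3
    from botocore.exceptions import ClientError

    client = boto3.Session(profile_name=profile).client("s3")

    def can_read(key):
        try:
            client.head_object(Bucket=bucket, Key=key)  # requires s3:GetObject
            return True
        except ClientError:
            return False  # typically 403 Forbidden

    return can_read
```

For example, `readable_keys(all_keys, boto3_reader("example-bucket", "outsider"))`, where the bucket and profile names are hypothetical, returns the keys that an unrelated account can read regardless of whether access comes from an ACL or a bucket policy.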

Permission Checking Automation

From an audit perspective, it’s difficult to quickly search an S3 bucket for all files with weak permissions. Amazon has published a blog entry that explains how to automatically detect and remediate weak permissions using CloudWatch and AWS Lambda. Because this method relies on CloudWatch events firing, it is a poor audit control: it detects only S3 objects that a user has requested, which may not include every object in the bucket.

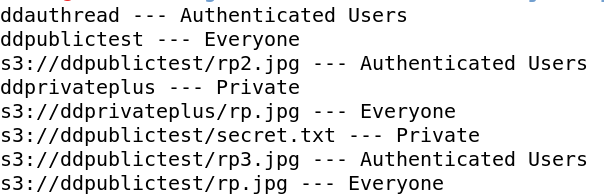

Unfortunately, neither the AWS CLI nor the AWS console quickly shows whether a bucket contains weakly-permissioned files. Administrators must click through each file and examine its permissions, or manually check the ACL on each object. Fortunately, Amazon provides S3 API libraries for many programming languages, which makes it possible to check object permissions across an entire AWS account very quickly. In this case, a Node.js script uses stored AWS credentials and the S3 API to check each S3 bucket for weak permissions, and then checks each object in every bucket to determine whether it is accessible to everyone or to authenticated users. This script is available on GitHub. The screenshot shows the script output.

Administrators must still review each flagged file to determine whether its permissions are appropriate, given the file’s contents and company policies. However, this is a much faster process than manually reviewing permissions.
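The article’s scanner is a Node.js script; the following is a separate Python sketch of the same idea, not a port of that script. It assumes `client` behaves like a boto3 S3 client (`boto3.client("s3")`) and uses its `list_buckets`, `get_bucket_acl`, `list_objects_v2`, and `get_object_acl` calls; it does not examine bucket policies or handle access errors.

```python
"""Account-wide scan for ACL grants to public groups (sketch)."""

PUBLIC_GROUPS = {
    "http://acs.amazonaws.com/groups/global/AllUsers": "everyone",
    "http://acs.amazonaws.com/groups/global/AuthenticatedUsers": "authenticated users",
}


def public_grants(acl):
    """Sorted (group, permission) pairs granted to public groups."""
    return sorted({
        (PUBLIC_GROUPS[grant["Grantee"]["URI"]], grant["Permission"])
        for grant in acl.get("Grants", [])
        if grant.get("Grantee", {}).get("URI") in PUBLIC_GROUPS
    })


def iter_keys(client, bucket):
    """Yield every key in a bucket, following list_objects_v2 pagination."""
    kwargs = {"Bucket": bucket}
    while True:
        page = client.list_objects_v2(**kwargs)
        for obj in page.get("Contents", []):
            yield obj["Key"]
        if not page.get("IsTruncated"):
            return
        kwargs["ContinuationToken"] = page["NextContinuationToken"]


def audit_account(client):
    """Return (bucket, key or None, group, permission) for each public grant."""
    findings = []
    for bucket in client.list_buckets()["Buckets"]:
        name = bucket["Name"]
        for group, perm in public_grants(client.get_bucket_acl(Bucket=name)):
            findings.append((name, None, group, perm))
        for key in iter_keys(client, name):
            acl = client.get_object_acl(Bucket=name, Key=key)
            for group, perm in public_grants(acl):
                findings.append((name, key, group, perm))
    return findings
```

Each finding is a (bucket, key, group, permission) tuple, with key set to None for bucket-level grants. boto3’s `client.get_paginator("list_objects_v2")` is an alternative to the manual pagination loop.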

Correct permissions are critical to using S3 buckets safely. Administrators should regularly review permissions on both S3 buckets and the objects stored in S3. Amazon has improved the AWS console to help identify weak permissions within S3, and will likely continue to increase the visibility of these misconfigurations. Until Amazon provides a simple method of checking object permissions en masse, basic scripting and programming skills can fill the gap. Administrators still need to verify that only non-sensitive information is stored with open permissions.
